Security teams deploy SIEM platforms expecting immediate threat detection and rapid incident response. Reality delivers something different. Organizations discover that poor log management practices drain budgets, overwhelm analysts, and create dangerous security blind spots.

The financial impact extends far beyond storage costs. Teams waste hours hunting through irrelevant data during critical investigations. Compliance violations stack up when retention policies fail. Alert fatigue forces analysts to ignore genuine threats buried in noise.

Why Strategic SIEM Log Management Matters Now

Modern enterprises generate enormous log volumes from cloud infrastructure, SaaS applications, endpoints, and network devices. Dell, for example, has reported generating over 125 billion log events per day—a scale that turns unmanaged logging into a liability.

At the same time, AI is reshaping the SIEM log management landscape. Correlation, detection, and even response workflows are increasingly automated. But here’s the catch: AI is only as good as the logs it’s fed—and how well it understands your environment.

Too often, organizations pump vast amounts of undifferentiated data into AI engines, assuming more data means better detection. In reality, irrelevant or poorly structured logs degrade accuracy, trigger false positives, and drown teams in noise. Worse, most AI models aren’t tailored to your infrastructure, business processes, or threat landscape. They miss what matters and flag what doesn’t.

This over-reliance on automation is also eroding core analyst skills. Manual threat hunting and log-based compromise assessment are becoming rare in SOCs. Many teams only realize how brittle their detection capabilities are after a breach—when the automation breaks down, and no one remembers how to work without it.

That’s why strategic SIEM log management is more critical than ever. It’s not just about data hygiene or compliance. It’s about giving both your AI systems and your analysts the right signal, the right context, and the ability to act with speed and confidence.

Poor log management doesn’t just cost you storage—it costs you visibility, detection, and ultimately, control.

6 Mistakes of SIEM Log Management

Mistake #1: Drowning in Undifferentiated Log Data

The Equal Treatment Trap

Organizations configure SIEM platforms to collect every available log source without strategic prioritization. This approach treats verbose application debug messages the same as authentication failures or lateral movement indicators. Teams drown in data while missing critical security signals.

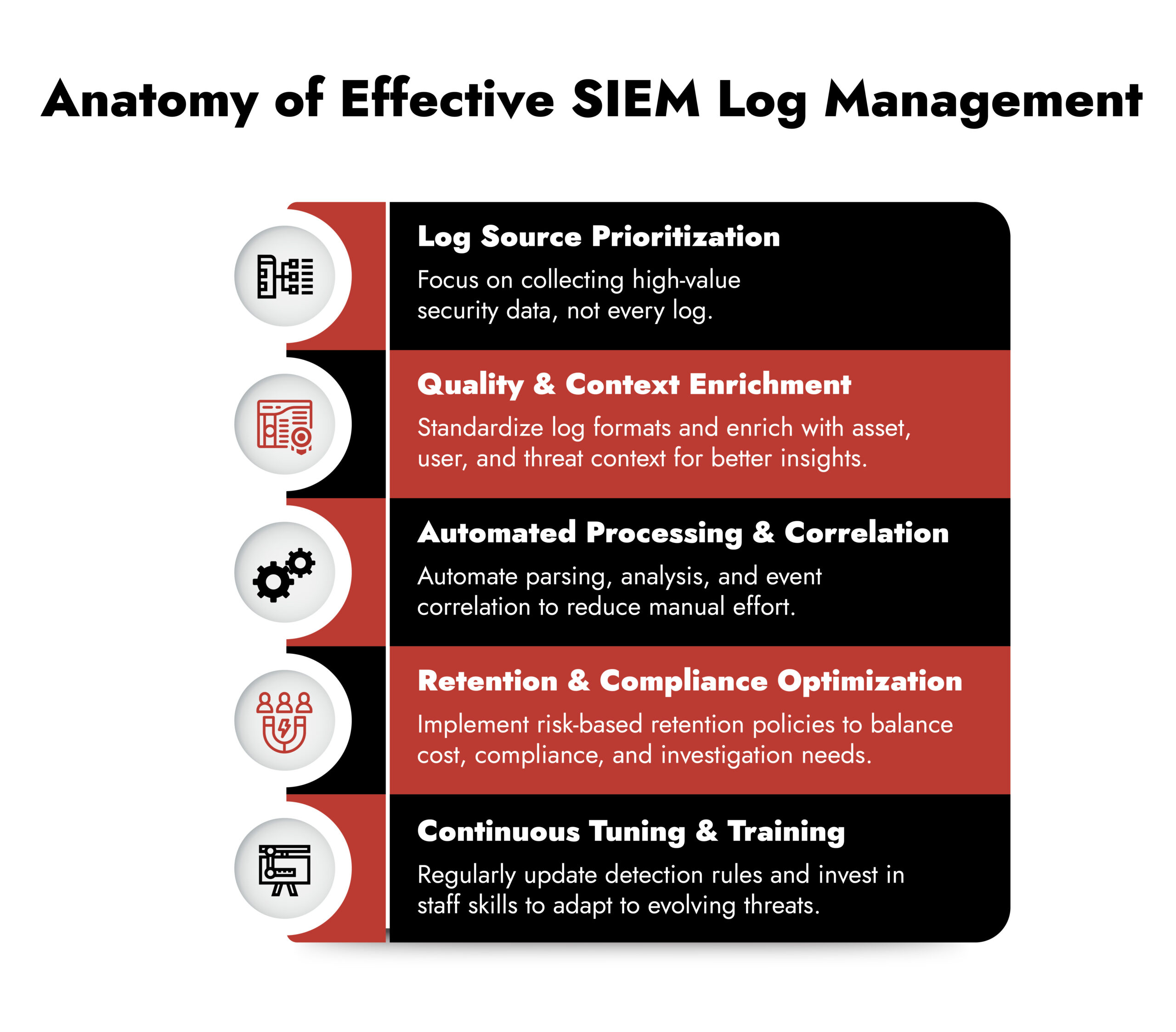

An effective log management system requires understanding which sources provide actionable intelligence. Authentication logs reveal credential attacks. Network flow data exposes unauthorized communications. Endpoint telemetry identifies malicious processes. Meanwhile, routine maintenance events rarely contribute to threat detection and response.

Why Volume Doesn’t Equal Security Value

Undifferentiated collection creates cascading operational problems:

- Storage explosion: Systems consume expensive resources storing irrelevant data

- Processing bottlenecks: Platforms struggle to analyze massive volumes of low-value information

- Investigation delays: Critical events get buried in operational noise

- Analyst burnout: Teams receive overwhelming alerts with minimal security relevance

Smart organizations implement classification systems that prioritize security-relevant data while filtering operational logs with minimal threat detection value.
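
As a rough illustration of such a classification system, the sketch below routes each event to one of three destinations. The source categories, severity names, and the `index` / `archive` / `drop` labels are assumptions made for this example, not settings from any particular SIEM product.

```python
from dataclasses import dataclass

# Hypothetical categories, chosen for illustration only.
HIGH_VALUE_SOURCES = {"authentication", "network_flow", "endpoint"}
LOW_VALUE_SEVERITIES = {"debug", "trace"}


@dataclass
class LogEvent:
    source: str    # e.g. "authentication", "application", "maintenance"
    severity: str  # e.g. "debug", "info", "warning", "error"
    message: str


def classify(event: LogEvent) -> str:
    """Return 'index', 'archive', or 'drop' for a single event."""
    if event.source in HIGH_VALUE_SOURCES:
        return "index"    # security-relevant: send to the SIEM for detection
    if event.severity.lower() in LOW_VALUE_SEVERITIES:
        return "drop"     # verbose debug output: little detection value
    return "archive"      # operational data: keep cheaply, outside the hot path


events = [
    LogEvent("authentication", "info", "failed login for user alice"),
    LogEvent("application", "debug", "cache warm-up finished"),
    LogEvent("maintenance", "info", "nightly backup completed"),
]
routing = [classify(e) for e in events]
```

The source check runs first on purpose: in this sketch a debug-level authentication event is still indexed, because the source is what makes it security-relevant.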

Mistake #2: Insufficient Log Retention and Protection

Compliance Blind Spots That Cost Millions

Organizations establish retention policies based on storage costs rather than security and regulatory requirements. This approach creates dangerous gaps during investigations and exposes enterprises to regulatory penalties. Advanced threats maintain persistence for months, requiring historical data for complete attack reconstruction.

Compliance frameworks specify minimum retention periods for different data types. Financial services need multi-year transaction log retention. Healthcare organizations must preserve patient data access trails. Insufficient retention prevents legal discovery compliance and comprehensive threat analysis.

Building Defensible Data Strategies

Effective retention balances compliance requirements with operational efficiency:

- Risk-based classification: Teams categorize logs by security value and regulatory necessity

- Automated lifecycle management: Systems archive or compress data based on predefined policies

- Legal hold capabilities: Platforms preserve specific datasets during investigations

- Tiered storage optimization: Organizations move older data to cost-effective storage media
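
The four practices above can be sketched as a single policy function. The retention periods, the 90-day hot window, and the category names are placeholders invented for this example; real values have to come from your own regulatory and legal requirements.

```python
from datetime import date, timedelta

# Hypothetical retention periods in days, for illustration only.
RETENTION_DAYS = {"regulated": 365 * 7, "security": 365, "operational": 30}
HOT_DAYS = 90  # assumed window for fast, searchable storage


def storage_action(category: str, event_date: date, today: date,
                   legal_hold: bool = False) -> str:
    """Return 'hot', 'cold', or 'delete' for a log of the given age."""
    age = (today - event_date).days
    # A legal hold blocks deletion even after the retention period ends.
    if age > RETENTION_DAYS[category] and not legal_hold:
        return "delete"
    # Older data moves to cheaper storage; recent data stays searchable.
    return "hot" if age <= HOT_DAYS else "cold"


today = date(2025, 1, 1)
recent = storage_action("security", today - timedelta(days=10), today)
aging = storage_action("security", today - timedelta(days=120), today)
expired = storage_action("operational", today - timedelta(days=45), today)
held = storage_action("operational", today - timedelta(days=45), today,
                      legal_hold=True)
```

Keeping the legal hold as an override on deletion only, rather than as a separate tier, means held data still follows the normal hot and cold placement rules.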

Mistake #3: Manual Log Analysis and Lack of Automation

The Analyst Bottleneck Crisis

Security teams cannot manually process the log volumes that modern IT environments generate. Organizations relying on manual analysis create significant bottlenecks that delay threat detection and response. Analysts spend excessive time on routine parsing, correlation, and investigation tasks.

Manual processes introduce human error and inconsistency. Different analysts reach different conclusions when analyzing identical data. During high-pressure incidents, manual analysis becomes even more error-prone and time-consuming.

How Automation Transforms Security Operations

Intelligent automation shifts log management from reactive to proactive:

- Automated parsing: Systems extract relevant fields and normalize formats without human intervention

- Behavioral analysis: Machine learning identifies anomalies and suspicious patterns automatically

- Threat correlation: Platforms connect related events across different log sources

- Response orchestration: Workflows execute containment actions for confirmed threats

Automation enables analysts to focus on strategic activities like threat hunting and investigation rather than routine data processing.
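
To make automated parsing and correlation concrete, here is a minimal sketch that normalizes OpenSSH-style failed-login lines and flags source addresses with repeated failures. The normalized field names and the threshold of three are assumptions for this example; a production parser would cover many more formats.

```python
import re
from collections import Counter

# Matches lines such as:
#   "Failed password for invalid user admin from 203.0.113.7 port 52144 ssh2"
FAILED_LOGIN = re.compile(
    r"Failed password for (?:invalid user )?(?P<user>\S+) "
    r"from (?P<src_ip>\S+) port (?P<port>\d+)"
)


def parse_line(line: str):
    """Extract normalized fields from one raw line, or None if no match."""
    match = FAILED_LOGIN.search(line)
    if match is None:
        return None
    return {
        "event_type": "auth_failure",
        "user": match["user"],
        "src_ip": match["src_ip"],
        "src_port": int(match["port"]),
    }


def noisy_sources(lines, threshold=3):
    """Return source IPs with at least `threshold` failed logins, sorted."""
    parsed = (parse_line(line) for line in lines)
    counts = Counter(e["src_ip"] for e in parsed if e is not None)
    return sorted(ip for ip, n in counts.items() if n >= threshold)


sample = [
    "sshd[811]: Failed password for root from 203.0.113.7 port 52144 ssh2",
    "sshd[811]: Failed password for invalid user admin from 203.0.113.7 port 52150 ssh2",
    "sshd[811]: Failed password for root from 203.0.113.7 port 52161 ssh2",
    "sshd[902]: Failed password for alice from 198.51.100.4 port 40022 ssh2",
    "cron[77]: nightly job finished",
]
flagged = noisy_sources(sample)
```

Counting per source address is only the simplest form of correlation; it ignores time windows and distributed attempts, which a real detection rule would need to handle.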

Mistake #4: Neglecting Log Quality and Context

How Poor Data Quality Undermines Detection

Incomplete logs, inconsistent formatting, and missing context create exploitable blind spots. Organizations discover quality issues during critical investigations when incomplete information prevents effective response. Attackers exploit these gaps to maintain persistence and avoid detection.

Common quality problems include truncated entries, missing timestamps, inconsistent field names, and incomplete event descriptions. These issues compound when logs originate from diverse sources with different formats and conventions.
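
A simple ingestion-time check can surface some of these problems before an investigation depends on the data. The sketch below assumes a hypothetical minimal schema of three required fields and ISO 8601 timestamps; both are illustrative choices, not a standard.

```python
from datetime import datetime

# Hypothetical minimal schema for this example.
REQUIRED_FIELDS = ("timestamp", "host", "event_type")


def quality_issues(event: dict) -> list:
    """Return a list of quality problems found in one normalized event."""
    issues = [f"missing:{name}" for name in REQUIRED_FIELDS
              if not event.get(name)]
    timestamp = event.get("timestamp")
    if timestamp:
        try:
            datetime.fromisoformat(timestamp)
        except (ValueError, TypeError):
            issues.append("bad_timestamp")  # present but not parseable
    return issues


good = quality_issues({
    "timestamp": "2025-01-01T12:00:00+00:00",
    "host": "web01",
    "event_type": "login",
})
bad = quality_issues({"timestamp": "yesterday", "host": "web01"})
```

Tracking how often each issue appears per log source gives a concrete starting point for fixing collectors and parsers.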

Context Enrichment That Drives Better Decisions

Raw log data rarely provides sufficient context for security decisions. Enrichment processes transform basic events into actionable intelligence:

- Asset context: Systems add criticality, ownership, and business function information

- User behavior: Platforms compare current activities against historical patterns

- Threat intelligence: External feeds provide attack context and attribution data

- Vulnerability data: Integration reveals exploitable weaknesses that attackers target

Context enrichment enables teams to prioritize alerts, assess potential impact, and execute informed response decisions rapidly.
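
A minimal sketch of asset and threat-intelligence enrichment might look like the following. The in-memory asset inventory, the indicator set, and the three priority labels are stand-ins invented for this example; in practice this context would come from a CMDB and external feeds.

```python
# Hypothetical lookup tables, for illustration only.
ASSETS = {
    "10.0.0.5": {"owner": "finance", "criticality": "high"},
    "10.0.0.9": {"owner": "facilities", "criticality": "low"},
}
KNOWN_BAD_IPS = {"203.0.113.7"}
UNKNOWN_ASSET = {"owner": "unknown", "criticality": "unknown"}


def enrich(event: dict) -> dict:
    """Return a copy of the event with asset and threat context added."""
    enriched = dict(event)
    asset = ASSETS.get(event.get("dest_ip"), UNKNOWN_ASSET)
    enriched["asset_owner"] = asset["owner"]
    enriched["asset_criticality"] = asset["criticality"]
    enriched["known_bad_source"] = event.get("src_ip") in KNOWN_BAD_IPS
    return enriched


def priority(enriched: dict) -> str:
    """Rank an enriched event as 'critical', 'elevated', or 'routine'."""
    bad_source = enriched["known_bad_source"]
    high_value = enriched["asset_criticality"] == "high"
    if bad_source and high_value:
        return "critical"
    if bad_source or high_value:
        return "elevated"
    return "routine"


alert = enrich({"src_ip": "203.0.113.7", "dest_ip": "10.0.0.5"})
alert_priority = priority(alert)
```

The same raw connection event would rank as routine without the lookups, which is the practical difference enrichment makes to triage.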

Mistake #5: Lack of Continuous Tuning and Staff Training

The Performance Degradation Cycle

SIEM platforms require ongoing tuning to maintain effectiveness. Organizations that deploy systems without continuous optimization experience gradual performance degradation. Detection rules become outdated, new threats evade legacy signatures, and false positive rates increase over time.

Environmental changes compound tuning challenges. New applications, infrastructure modifications, and business process updates alter log patterns. Without continuous tuning, systems become less effective and more expensive to operate.
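
One way to make tuning routine is to review each rule's false positive rate from analyst dispositions. The sketch below assumes dispositions arrive as `(rule_id, verdict)` pairs and uses an 80% threshold with a minimum of five alerts; all of these are illustrative choices rather than recommended values.

```python
from collections import defaultdict


def rules_needing_tuning(dispositions, max_fp_rate=0.8, min_alerts=5):
    """Map rule id to false positive rate for rules above the threshold.

    `dispositions` is an iterable of (rule_id, verdict) pairs, where verdict
    is a string such as "false_positive" or "true_positive".
    """
    totals = defaultdict(int)
    false_positives = defaultdict(int)
    for rule_id, verdict in dispositions:
        totals[rule_id] += 1
        if verdict == "false_positive":
            false_positives[rule_id] += 1

    flagged = {}
    for rule_id, total in totals.items():
        if total < min_alerts:
            continue  # too few alerts to judge the rule fairly
        rate = false_positives[rule_id] / total
        if rate >= max_fp_rate:
            flagged[rule_id] = rate
    return flagged


history = (
    [("R1", "false_positive")] * 9 + [("R1", "true_positive")]
    + [("R2", "true_positive")] * 5
    + [("R3", "false_positive")] * 2
)
to_review = rules_needing_tuning(history)
```

Here only `R1` is flagged: `R2` never fires falsely, and `R3` has too few alerts to evaluate.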

Building Expertise for Long-Term Success

Staff training is a critical investment in SIEM log monitoring success. Security teams need a deep understanding of log sources, detection techniques, and investigation methodologies. Training programs must cover:

- Log source expertise: Teams learn what each system logs and how to interpret data

- Detection methodology: Analysts master techniques for identifying threats within large datasets

- Investigation skills: Teams develop systematic approaches to security incident analysis

- Platform proficiency: Staff learn to use the advanced capabilities of their SIEM platform effectively

Regular training ensures teams exploit platform capabilities fully while adapting to evolving threats.

Mistake #6: Blind Reliance on AI Without Environmental Tuning

AI isn’t a fix-all. It’s a tool—and like any tool, it fails without the right inputs.

Modern SIEM platforms increasingly rely on AI for correlation, detection, and even response. But here’s the problem: these systems are only as good as the logs they ingest and the environment they understand.

Many organizations assume that more data equals smarter AI. In reality, overfeeding irrelevant or low-context logs overwhelms AI models and floods teams with weak alerts. Even worse, generic AI engines often miss subtle, environment-specific threats because they weren’t trained on your infrastructure or behaviors.

And when AI becomes the crutch, analysts lose touch.

Manual threat hunting, log investigation, and compromise assessment are becoming rare skills. SOC teams are trusting automation until a breach forces them to realize: no machine can fully replace human judgment in high-stakes scenarios.

What this really means is:

- Selecting the right logs matters more than ever

- AI correlation must be tuned to your operational environment

- Analysts still need to understand the raw data—not just rely on summarized alerts

This isn’t an anti-AI message. It’s a call for smarter implementation—where technology enhances human expertise, not replaces it.

Transform Your SIEM Log Management Strategy with NetWitness

The six mistakes above don’t just happen—they persist because most SIEM tools aren’t built to solve them. NetWitness is.

- Drowning in log noise?

NetWitness supports 350+ log sources and uses dynamic parsing to prioritize security-relevant data, so you capture what matters, not everything.

- Retention gaps and compliance risks?

Built-in templates for HIPAA, PCI DSS, SOX, and more make compliance easier. Tiered storage and legal holds ensure critical logs are never lost.

- Manual analysis slowing detection?

With behavioral analytics, automated correlation, and real-time enrichment, NetWitness slashes investigation time and alert fatigue.

- Poor log quality and lack of context?

NetWitness enriches logs with asset, user, and threat intel data, turning incomplete entries into actionable alerts.

- Outdated rules and undertrained teams?

Prebuilt use cases, flexible reporting, and analyst-friendly dashboards keep your SIEM log management tuned and your team in control.

Bonus: It works across on-prem, hybrid, and cloud—including AWS, Azure, and Office 365—so your visibility scales with your infrastructure.

Address these common mistakes and implement strategic log management practices. Transform your SIEM platform from a cost center into a powerful security asset that provides real protection against modern threats.

Frequently Asked Questions

1. What makes certain log sources more valuable for SIEM log management?

Not all logs are equal. NetWitness focuses on high-value sources—like authentication attempts, network flows, and endpoint activity—and uses dynamic parsing to extract and enrich metadata in real time. This ensures you’re not just collecting logs, but turning them into meaningful security signals.

2. How does NetWitness help manage log retention for compliance?

NetWitness provides prebuilt compliance templates for regulations like PCI DSS, HIPAA, and SOX. It supports tiered storage, automated lifecycle management, and legal holds—making it easier to retain the right logs for the right amount of time without unnecessary overhead.

3. How does NetWitness reduce false positives in automated SIEM log monitoring?

The platform combines behavioral analytics, threat intelligence enrichment, and context-aware correlation to minimize noise and surface high-confidence alerts. Analysts get fewer false alarms—and more time to focus on real threats.

4. How can organizations improve log quality and consistency with NetWitness?

NetWitness uses patented parsing and indexing to standardize logs at ingestion. It automatically adds context like asset role, user behavior, and known vulnerabilities, so your team isn’t working with raw, fragmented data during investigations.

5. Does NetWitness offer support or services for tuning and optimization?

Yes. In addition to in-product tools like custom rule building, reporting dashboards, and tuning workflows, NetWitness offers Professional Services to help organizations optimize detection logic, log source onboarding, and investigation processes for long-term success.